Sharing is important, not easy…

Complex listening situations have been described as switching between focusing on one talker and sharing attention within a group. How does Phonak perform against its competitors in this situation?

Beamformers help in noisy situations

Whether we are conscious of it or not, most of us switch multiple times a day between sharing our attention among several sources and focusing on one thing whilst blocking out surrounding distractions. A typical example in my life would be sharing my attention between my two kids. Like any Mum of multiple children, I try to share my attention equally between the two. But there are times, such as when one of them falls over or is having a meltdown, that I have to use my inbuilt beamformer to focus on one of them and block out everything else, just for a while. Another example is when I need to make a phone call whilst looking after the kids. I mainly focus on the phone call and block out what's going on around me, yet I intermittently turn my attention back to the kids to check that they are OK.

For our clients with hearing loss, noisy situations are always a challenge. When a client is listening to a friend in a busy restaurant, the automatic classifier of the hearing aid should detect that the situation consists of both noise and speech, and it should activate the beamformer. The beamformer then forms a narrow beam to the front whilst attenuating sound from behind, so that the listener can focus on the person in front.

When life gets even more complex

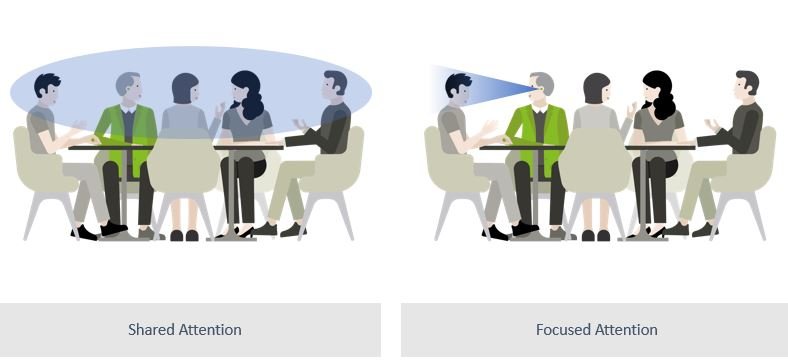

The above example works well for one-on-one conversations in a restaurant, but realistically life is full of more complex listening situations, where several people may be sitting around talking in a noisy place. Models from cognitive psychology (Shinn-Cunningham, 2008) describe a typical group conversation as listeners constantly switching between shared and focused attention (figure 1). Listeners may initially share their attention amongst speakers within a group in order to decide which person they most want to listen to. Once they have decided, they are likely to focus their attention on a single speaker and try to block out the other conversations and noise around them. However, they will most likely switch back to sharing attention later.

How do Phonak hearing aids perform in complex noisy situations?

In a recent study at the Hörzentrum Oldenburg, Germany, the automatic classifier from Phonak, AutoSense OS, was compared to automatic systems from two competitors with regard to speech intelligibility and listening effort in these complex listening situations. A second objective was to determine whether performance differed between focusing attention on one talker and sharing attention between multiple talkers.

Thirty experienced hearing aid users with moderate hearing loss were fitted with Phonak Audéo B90-312T hearing aids (equivalent in performance to Audéo Marvel for the specific aspects tested in this study), plus premium hearing aids from two competitors. The Phonak devices were programmed with AutoSense OS, and the two competitor devices were programmed with their own automatic programs.

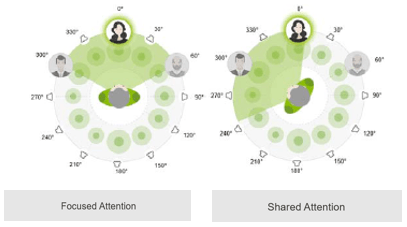

Participants sat in the center of a ring of 12 loudspeakers, facing the loudspeaker at either 0° or 300° (-60°) (figure 2, left and right, respectively). Diffuse cafeteria noise was played from all 12 loudspeakers at a total level of 65 dB, and speech material from the Oldenburg sentence test (OLSA) was played constantly and simultaneously from three loudspeakers at 0°, -60° and +60°, with an SNR of 0 dB for each talker. This scene represented a person sitting in a busy cafeteria speaking with three people.
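For readers who like to see the numbers, the geometry and levels of this scene can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the study's actual calibration: it assumes the 12 loudspeakers are evenly spaced, that the 0 dB SNR is defined relative to the total noise level, and that the noise from the individual loudspeakers adds up incoherently. All function names are made up for this sketch.

```python
import math

NUM_LOUDSPEAKERS = 12      # from the study description
TOTAL_NOISE_LEVEL_DB = 65  # total cafeteria noise level, from the study description
SNR_DB = 0                 # SNR per talker, from the study description


def loudspeaker_angles(n=NUM_LOUDSPEAKERS):
    """Azimuths in degrees, ASSUMING the loudspeakers are evenly spaced."""
    step = 360 / n
    return [i * step for i in range(n)]


def to_signed(angle_deg):
    """Map an azimuth in [0, 360) to the signed range (-180, 180]."""
    a = angle_deg % 360
    return a - 360 if a > 180 else a


def talker_level_db(noise_level_db, snr_db):
    """Level of one talker, ASSUMING SNR is relative to the total noise level."""
    return noise_level_db + snr_db


def per_loudspeaker_noise_db(total_db, n=NUM_LOUDSPEAKERS):
    """Noise level per loudspeaker, ASSUMING n equal, incoherent sources."""
    return total_db - 10 * math.log10(n)


if __name__ == "__main__":
    print([to_signed(a) for a in loudspeaker_angles()])
    print(talker_level_db(TOTAL_NOISE_LEVEL_DB, SNR_DB))
    print(round(per_loudspeaker_noise_db(TOTAL_NOISE_LEVEL_DB), 1))
```

Under these assumptions, the loudspeaker at 300° is the same position as -60°, and an SNR of 0 dB simply means that each talker is as loud as the whole of the cafeteria noise, which is what makes the scene so demanding.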

Recordings were made with all three hearing aids while the participant faced the loudspeaker at 0° and at -60°. The participants' task was to compare the recordings of two hearing aids at a time (paired comparison) and rate speech intelligibility and listening effort.

Phonak outperforms competitors both when attention is focused and shared

The paired comparison tests showed that AutoSense OS was rated as giving better speech intelligibility and lower listening effort than the two competitor devices, both when the speaker is directly in front (focused attention) and when the speaker is at the side (shared attention). This means Phonak hearing aid wearers can benefit from better speech understanding with the least amount of listening effort in a typical complex listening situation with multiple talkers.

Read the full study or watch this short video.

References:

Shinn-Cunningham, B. (2008). Object-based auditory and visual attention. Trends in Cognitive Sciences, 12(5), 182-186.