Phonak Infinio Ultra: Real-time adaptation for high-quality sound in every situation

Leveraging 18 times more training data, Phonak’s AutoSense OS™ 7.0 has been retrained to enhance acoustic scene classification, enabling Infinio Ultra hearing aids to intelligently adapt to new sound environments in real time.

Everyday listening is rarely static. Your clients face a variety of sound environments, each with its own challenges that require quick adjustment. While the human brain naturally adapts to these constant changes, people with hearing loss often find it hard to keep up, making conversations tiring and sometimes overwhelming in busy settings.

This is where AI-driven sound scene classification in hearing aids makes a real difference. By recognizing the environment in real time (whether it involves speech, background noise, or music), hearing aids automatically adjust settings to provide clearer speech, more natural sound, less listening effort, and fewer manual program changes.

Let’s explore how this technology works, why it matters for our clients and how it supports seamless listening.

Why do we need AutoSense OS 7.0 and how does it work?

AutoSense is Phonak’s automatic operating system: the brain inside the hearing aids that constantly listens to the surroundings and instantly adjusts settings by activating the right program for optimal hearing.

As your client moves through different acoustic environments, from quiet rooms to noisy restaurants or concerts, AutoSense analyzes the soundscape continuously and smoothly switches between programs like Speech-in-Noise or Music mode without any input from the wearer.

The outcome? A natural hearing experience adapting seamlessly to life’s moments.

AutoSense uses machine learning in two key steps to ensure precision:

- Acoustic analysis & classification: Extracting parameters such as modulation patterns, pitch, and temporal cues from the environment and comparing them against extensive training data.

- Real-time adaptation: Activating programs such as Speech-in-Noise or Music while engaging features such as NoiseBlock™, StereoZoom 2.0™, or Spheric Speech Clarity™, depending on listening demands.

This lets users move effortlessly between diverse acoustic environments without needing manual adjustments.

At its core lies an advanced classifier trained on complex real-world sounds. It analyzes onset changes, frequency distribution, energy balance, modulation patterns, and signal-to-noise ratio to categorize distinct acoustic scenes (speech vs. noise vs. music). This clear separation ensures precise program activation, focused on speech understanding and comfort even in challenging noise.
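To make the classification step more concrete, here is a minimal sketch of the general technique: compute a few frame-level acoustic features and assign the scene to the nearest class prototype. It is not Phonak’s classifier. The feature definitions (`level_db`, `modulation_db` as a crude modulation proxy, `onset_rate`), the prototype values in `PROTOTYPES`, the `SCALES`, and the nearest-prototype rule are all illustrative assumptions. A production system learns its decision rules from large labeled recordings and also uses cues such as frequency distribution, SNR, and pitch, which would be needed to separate music reliably.

```python
import math
import random
from typing import Dict, List, Sequence

# Hypothetical scene prototypes (mean feature values per class). A real classifier
# learns its decision rules from labeled recordings; these numbers are invented.
PROTOTYPES: Dict[str, Dict[str, float]] = {
    "quiet":           {"level_db": -50.0, "modulation_db": 3.0, "onset_rate": 0.05},
    "noise":           {"level_db": -15.0, "modulation_db": 1.0, "onset_rate": 0.01},
    "speech_in_noise": {"level_db": -15.0, "modulation_db": 6.0, "onset_rate": 0.15},
}
# Hypothetical per-feature scales so that no single feature dominates the distance.
SCALES: Dict[str, float] = {"level_db": 10.0, "modulation_db": 2.0, "onset_rate": 0.05}


def frame_energies_db(signal: Sequence[float], frame_len: int) -> List[float]:
    """Mean power of each non-overlapping frame, in dB relative to full scale."""
    energies = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        power = sum(x * x for x in frame) / frame_len
        energies.append(10.0 * math.log10(power + 1e-12))  # small floor avoids log(0)
    return energies


def extract_features(signal: Sequence[float], frame_len: int = 160) -> Dict[str, float]:
    """Crude stand-ins for the level, modulation, and onset cues described above."""
    e = frame_energies_db(signal, frame_len)
    mean_e = sum(e) / len(e)
    # Spread of frame energies: speech-like signals fluctuate, steady noise barely does.
    modulation = math.sqrt(sum((x - mean_e) ** 2 for x in e) / len(e))
    # Fraction of frame-to-frame steps where energy rises by more than 6 dB.
    onsets = sum(1 for a, b in zip(e, e[1:]) if b - a > 6.0)
    return {"level_db": mean_e, "modulation_db": modulation,
            "onset_rate": onsets / max(len(e) - 1, 1)}


def classify(features: Dict[str, float]) -> str:
    """Nearest-prototype rule on scaled features (a stand-in for a trained model)."""
    def distance(proto: Dict[str, float]) -> float:
        return sum(((features[k] - proto[k]) / SCALES[k]) ** 2 for k in SCALES)
    return min(PROTOTYPES, key=lambda name: distance(PROTOTYPES[name]))


# Synthetic test signals at 16 kHz: steady noise vs. noise with a 4 Hz envelope.
rng = random.Random(0)
fs = 16_000
steady = [rng.gauss(0.0, 0.1) for _ in range(fs)]
modulated = [0.2 * 0.5 * (1 + math.sin(2 * math.pi * 4 * n / fs)) * rng.gauss(0.0, 1.0)
             for n in range(fs)]
f_steady = extract_features(steady)
f_mod = extract_features(modulated)
print(classify(f_steady), round(f_steady["modulation_db"], 2), round(f_mod["modulation_db"], 2))
```

The 4 Hz envelope, roughly the syllable rate of running speech, produces a much larger frame-energy spread than steady noise; that contrast is what a modulation feature exploits.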

Why does retraining AutoSense OS 7.0 with more data matter?

AutoSense OS was retrained with new recordings featuring diverse speakers, noises, and music genres, amounting to eighteen times more training data than before. This helps it better differentiate complex environments.

Two tricky conditions were prioritized:

- Speech-in-noise environment: Distinguishing an environment that contains only noise (where comfort is the priority) from one with speech in noise (where improving the SNR is critical).

- Music: Music varies widely across genres, which can confuse classifiers. Retraining helps AutoSense OS identify music more reliably while preserving its natural fidelity.

Have you ever wondered why we don’t aim to recognize every single sound perfectly all the time? Real life isn’t perfect; it’s always changing. Sometimes a cappella vocals can sound like speech, and sometimes background noise is so soft that it’s best left untouched.

If the system tried to force every moment into rigid categories in pursuit of “perfect” classification, it could degrade sound quality by cutting parts of a favorite song or over-processing gentle ambient sounds that do not bother your client. Instead, AutoSense focuses on what matters most: clear conversations when needed, rich music enjoyment, and comfortable listening everywhere else. It does not chase lab-perfect numbers; it delivers perfectly relevant moments tailored to real-world needs.
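The trade-off described above can be illustrated with a simple switching policy: act only on confident classifications, and only after a new scene has persisted for a while, so brief or ambiguous moments do not trigger a program change. This is a minimal sketch under stated assumptions; the `ProgramSelector` class, the 0.7 confidence threshold, the three-frame dwell time, and the program names are hypothetical and are not AutoSense’s actual parameters or logic.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ProgramSelector:
    """Hypothetical switching policy: change program only after `dwell_frames`
    consecutive confident votes for the same new scene."""
    current: str = "calm"          # hypothetical program name
    min_confidence: float = 0.7    # hypothetical confidence threshold
    dwell_frames: int = 3          # hypothetical persistence requirement
    _candidate: Optional[str] = field(default=None, init=False, repr=False)
    _count: int = field(default=0, init=False, repr=False)

    def update(self, scores: Dict[str, float]) -> str:
        """Feed one frame of class scores (e.g. probabilities); return the active program."""
        best = max(scores, key=scores.get)
        if scores[best] < self.min_confidence or best == self.current:
            # Uncertain, or already in the matching program: leave the sound as it is.
            self._candidate, self._count = None, 0
            return self.current
        if best == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = best, 1
        if self._count >= self.dwell_frames:
            self.current, self._candidate, self._count = best, None, 0
        return self.current


confident = {"calm": 0.1, "speech_in_noise": 0.8, "music": 0.1}
ambiguous = {"calm": 0.4, "speech_in_noise": 0.35, "music": 0.25}

selector = ProgramSelector()
frames = [confident, confident, ambiguous, confident, confident, confident]
history = [selector.update(scores) for scores in frames]
print(history)
```

The ambiguous third frame resets the count, so the switch happens only after three consecutive confident frames. A longer dwell time trades responsiveness for stability.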

How did we verify improvements with AutoSense OS 7.0 and what benefits does it bring?

1. Laboratory A/B comparison¹

We tested labeled samples representing speech-in-noise situations, calm environments and various music genres through devices running both AutoSense 6.0 and 7.0 under controlled conditions.

Using confusion matrices to measure the correct-recognition rate for each category, we found that AutoSense 7.0 classifies music and speech-in-noise scenes with 24% higher accuracy than its predecessor.
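For readers less familiar with confusion matrices, the sketch below shows how a per-category recognition rate is read off one: rows hold the true category, columns the predicted one, and the rate for a category is its diagonal count divided by its row total. The counts are invented for illustration and are not data from the study. Because a headline figure such as “24% higher” can be meant either relatively or in percentage points, the sketch computes both; which convention the study uses is not stated here.

```python
from typing import Dict, List

LABELS = ["speech_in_noise", "calm", "music"]


def recognition_rates(matrix: List[List[int]], labels: List[str]) -> Dict[str, float]:
    """Correct-recognition rate per true category: diagonal count / row total.
    Rows are true categories, columns are predicted categories."""
    rates = {}
    for i, label in enumerate(labels):
        row_total = sum(matrix[i])
        rates[label] = matrix[i][i] / row_total if row_total else 0.0
    return rates


def relative_gain(old: float, new: float) -> float:
    """Improvement as a fraction of the old rate (0.25 means '25% higher')."""
    return (new - old) / old


def point_gain(old: float, new: float) -> float:
    """Improvement in percentage points."""
    return 100.0 * (new - old)


# Invented counts for illustration only (not study data).
old_matrix = [[60, 30, 10], [10, 85, 5], [15, 10, 75]]
new_matrix = [[78, 15, 7], [8, 88, 4], [6, 6, 88]]

old_rates = recognition_rates(old_matrix, LABELS)
new_rates = recognition_rates(new_matrix, LABELS)
for label in LABELS:
    print(label, old_rates[label], new_rates[label],
          round(relative_gain(old_rates[label], new_rates[label]), 3),
          round(point_gain(old_rates[label], new_rates[label]), 1))
```

With these made-up counts, speech-in-noise recognition rises from 0.60 to 0.78: a gain of 18 percentage points, or 30% in relative terms.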

2. Real-life datalogging verification¹

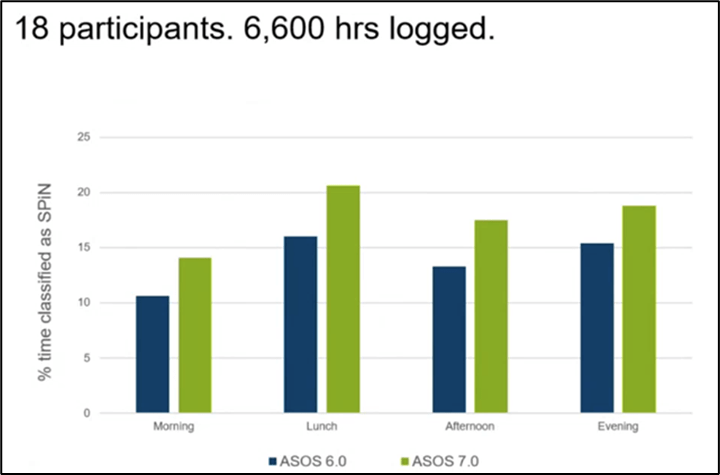

To see whether this improvement translates into real life, we analyzed over 6,600 hours of logged environmental data from eighteen participants who wore AutoSense 6.0 on one ear and version 7.0 on the other during their daily activities.

Results showed that speech-in-noise programs were activated more frequently and more accurately with version 7.0 (see Figure 1). These activations aligned well with the listening needs participants actually encountered at different times of day.
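Because each participant wore both versions at once (one per ear), the datalogging comparison comes down to how logged time is distributed across programs on each side. The sketch below assumes a hypothetical log of `(version, program, hours)` records with made-up values. It only shows how program shares are compared; judging whether an activation was correct additionally requires knowing the actual listening situation.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical log format: (OS version on that ear, program, hours logged).
Record = Tuple[str, str, float]


def program_shares(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Fraction of logged time spent in each program, per OS version (ear)."""
    totals: Dict[str, float] = defaultdict(float)
    hours: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for version, program, h in records:
        totals[version] += h
        hours[version][program] += h
    return {
        v: {p: (h / totals[v] if totals[v] else 0.0) for p, h in progs.items()}
        for v, progs in hours.items()
    }


# Invented records for one wearer; not data from the study.
log = [
    ("6.0", "calm", 6.0), ("6.0", "speech_in_noise", 2.0),
    ("7.0", "calm", 5.0), ("7.0", "speech_in_noise", 3.0),
]
shares = program_shares(log)
print(shares["6.0"]["speech_in_noise"], shares["7.0"]["speech_in_noise"])
```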

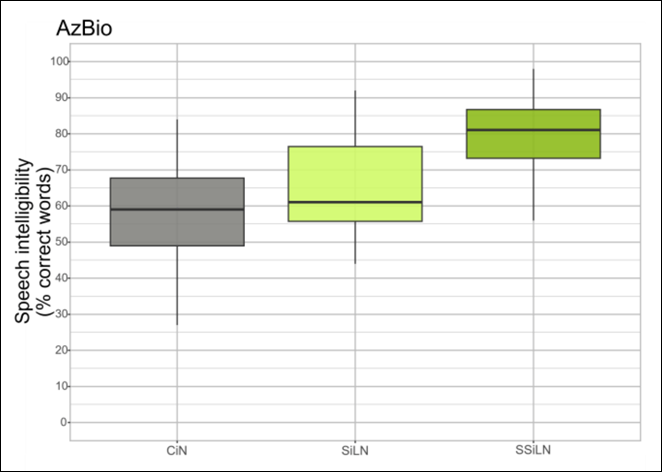

3. Speech understanding clinical study¹

At the Phonak Audiology Research Centre (PARC), 17 experienced hearing aid wearers took part in tests simulating noisy real-world conditions, including speech in loud noise.

When AutoSense correctly classified these environments:

- Word recognition improved significantly

- Gains of up to +8 percentage points were seen with Speech-in-Loud-Noise mode

- Even greater gains (+22 percentage points) occurred when Spheric Speech-in-Loud-Noise was active.

These findings confirm that enhanced classification precision directly boosts communication performance across the Comfort-in-Noise (CiN), Speech-in-Loud-Noise (SiLN), and Spheric Speech-in-Loud-Noise (SSiLN) programs.

Conclusion

For us as audiologists supporting clients through complex auditory worlds, AutoSense OS™ 7.0 offers meaningful advances where they matter most: clearer conversations in noise and richer musical experiences, without compromising comfort or fidelity.

It is not about chasing perfect numbers but about delivering perfectly relevant moments that help our clients thrive socially and emotionally every day.

To learn more about Phonak Infinio Ultra, we invite you to visit our website.

References:

1. Sanchez, C., Giurda, R., Hobi, S., Preuss, M. (2025). AutoSense OS™ 7.0 adapts 24% more precisely to listening situations. Phonak Insight. Retrieved from: https://www.phonak.com/evidence, accessed November 2025.

2. Wright, A. et al. (2025). AutoSense OS 7.0 improves speech understanding with highly rated sound quality for challenging listening environments. Phonak Field Study News. Retrieved from: https://www.phonak.com/evidence, accessed November 2025.